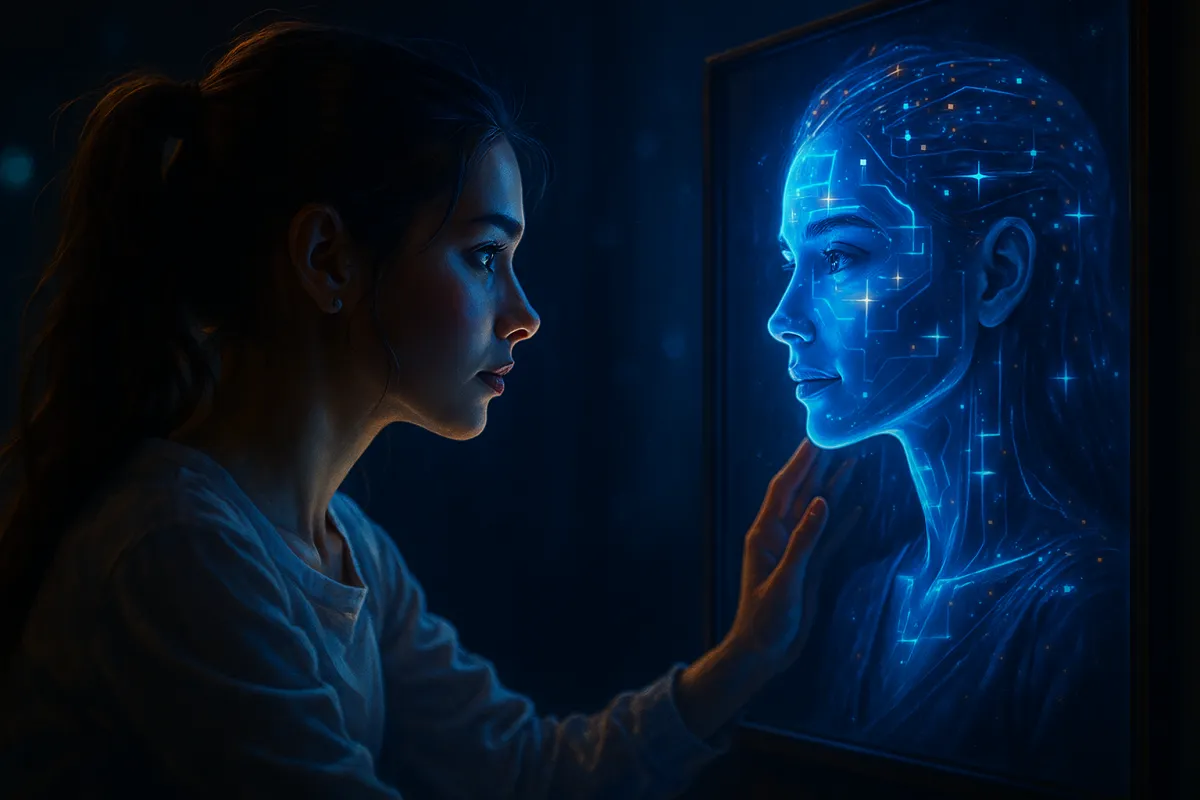

The Day I Met My AI Twin: A Real Story About Digital Identity and AI Ethics

A digital ethics confession — rewritten for the post-AI web.

I’ve been writing about AI nonstop for over a year now, and lately I’ve been looking back at the early moments that shaped my thinking. One date still stands out—not because it was glamorous, but because it landed me squarely in the middle of my own digital-ethics framework.

March 17, 2024.

That was the day I accidentally introduced the internet to…

my AI twin.

The Accidental Doppelgänger

I was experimenting with one of those AI headshot generators, just tinkering, not trying to make a statement. If you’ve ever tried one, you know the experience: suddenly there are 50 versions of you on your screen, each with slightly different hair, lighting, and a level of jawline definition you’ve never personally encountered.

One of them caught my attention. It looked like me… but polished. Confident. Like the version of myself who had slept eight hours and actually remembered to drink water.

Naturally, I did what any reasonable person does with a great picture:

I posted it on Facebook and swapped it in as my profile photo.

I did not expect what happened next.

The Compliments I Didn’t Earn

Within minutes, my notifications lit up.

“You look amazing!” “Love this shot!” “Your hair is gorgeous!” “That’s a great picture of you!”

Each kind comment made me feel two things at once:

Gratitude, because my friends are thoughtful and kind.

A growing sense of “oh noooo.”

See, when you work in digital ethics, your own frameworks love to surprise-attack you at exactly the wrong time. As the likes and comments rolled in, my internal ethical alarm was practically flashing red.

Because here’s the truth:

My friends thought they were complimenting me.

But they were really complimenting a machine-made version of me.

I always teach that AI disclosure hinges on a simple question: Would your audience feel “Willy-Wonka’d” if they learned the truth?

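(Yes, that's my nod to the Glasgow Willy Wonka disaster, where AI-generated promotional images promised far more than families actually found when they showed up.)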

And suddenly I realized: Yes. They would.

I had unintentionally crossed the line between casual experimentation and misleading representation.

The Confession

So, I came clean:

“Everyone… what if I told you this is an AI photo? I didn’t expect so many comments, and now I feel bad. It’s just one of the AI-generated headshots I was playing with.”

What happened next is one of the reasons I love my people:

They weren’t upset.

They didn’t shame me.

They were curious.

They wanted to know how AI did it, what tool I used, and how the technology worked. Their reaction reminded me of something important:

Transparency strengthens trust.

But trust requires me to be the one who tells the truth first.

This was never about whether they would forgive me.

It was about whether I could forgive myself if I didn’t say anything.

What This Taught Me About AI Ethics

This tiny Facebook moment became a perfect example of why digital ethics is so hard. There isn’t a universal rule for when you must disclose AI. Context matters. Audience expectations matter. Relationships matter.

The question isn’t:

“Is AI allowed here?”

but

“Does this align with my values and what my audience believes they’re seeing?”

Last week, I worked with a business client who wasn’t ready for AI to write in their brand voice. Not because AI couldn’t, but because it didn’t align with their values yet. That’s okay. That’s honest.

My AI twin moment reinforced exactly what I teach:

Ethical use of AI isn’t about fear.

It’s about alignment.

It’s about integrity.

It’s about honoring the expectations of the people who trust you.

AI gives us enormous creative range, but it also sharpens the responsibility we carry when others are relying on us to be authentic.

So What Now?

We’re living in a world where AI can generate our faces, our voices, our words, and even our personalities. Deciding how to navigate that responsibly isn’t optional anymore—it’s foundational.

My advice?

Start with your values.

Let them shape the way you use AI.

And if you ever meet your AI twin… think twice before letting her run your Facebook profile.

Even digital ethics educators need reminders sometimes.

And sometimes the lesson comes from the version of you that never actually existed.

Now I’m curious:

Have you had your own “Willy Wonka” moment with AI yet?

What surprised you, confused you, or made you rethink something?

Drop it in the comments. I’d love to learn from your story as we navigate this AI-shaped world together.

Sarah Gibson is a professor, AI strategist, and Editor-in-Chief of the Journal of Human-Centered AI: Creativity & Practice. She helps people move from fear to flourishing through practical, ethical AI adoption. She teaches and speaks nationally on human-centered responsible AI, AI readiness, and the post-AI classroom.

Image generated by ChatGPT.